In cyber security circles, the term AI¹ means lots of things to different people. But when we talk to customers, we find the term is overused and often misunderstood. So, let’s explore what exactly AI means to cyber professionals, how it can improve your SOC efficiency, and how it can bring value to security teams.

The next phase of our existence is going to require figuring out how best to get along with smart machines. We see so many movies about how machines are going to take over – but the reality is that humans are already collaborating with machines, and their influence will continue to accelerate. We need to understand how humans and smart machines create value together when it comes to security operations – keeping people, processes, and organizations safe.

Cyber security strategy is an area being disrupted by today’s changing technology landscape. The threat landscape is expanding rapidly, as is the number of connected systems that need to be protected. Humans alone can’t keep up with the demand. Most of the innovations in AI only exacerbate the problem – creating more alerts for an already overburdened team of human analysts. This becomes the new frontier: How do humans work together effectively with smart machines to solve this problem?

In this post, we’ll look at how AI can augment the capabilities of human analysts to increase the effectiveness of computer security incident management and accelerate detection to reduce cyber security threats to the organization.

Trends in Cyber Security and AI

In the last year, there has been tremendous investment in AI. We have seen that enterprises are no longer just talking about Robotic Process Automation (RPA) or discussing using algorithms to understand customers better – but actually making the shift to implementing AI.


One example of this growing activity surrounding AI is Microsoft’s announcement earlier this year of a $1 billion investment in OpenAI, as part of a new, multiyear partnership. In fact, industry-wide funding for AI and machine learning is estimated to have grown 72% year over year.

According to Deloitte, one result of this is that, as organizations increase their use of AI, they are defining new “superjob” roles for humans: roles that combine work from several traditional jobs, as more of the routine work in “standard jobs” is taken over by machines.

Worldwide information security spending is expected to exceed $124 billion in 2019, according to a Gartner forecast. However, it’s not just a question of increased spend; the key is getting the right data and applying it to the right problems.

AI in the SOC: Impacting Alert Triage and Incident Response

When it comes to security operations centers (SOCs), there are two key roles: Alert Triage (L1) and Incident Response (L2).

How AI Will Impact Alert Triage (L1)

Alert Triage (Level 1) teams are responsible for examining alerts and escalating those that may indicate an attack. If you drill down on the work done by an L1 Analyst, most of it really involves enriching alerts and understanding their context for cyber security risk assessment: for example, determining who owns an IP address, whether there’s a vulnerability in the affected system, and which network a device belongs to.

An L1 Analyst performs a number of enrichment tasks, and most of them can be done automatically. When we talk about AI, we use buzzwords like false positives and false negatives; however, the bottom line is that today’s L1 Analyst enriches alerts in order to decide definitively whether or not to escalate them. Enrichment is what gives the L1 Analyst definitive answers – and most of these same activities can be performed by a smart machine.
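To make the enrichment steps concrete, here is a minimal Python sketch of automated enrichment followed by an escalation decision. It assumes a hypothetical in-memory asset inventory, network map, and vulnerability list (`ASSET_OWNERS`, `NETWORKS`, `VULNERABLE_HOSTS`); in a real SOC these lookups would query a CMDB, IPAM, or vulnerability scanner. The `Alert` type and the escalation rule are illustrative assumptions, not CyberProof’s method.

```python
import ipaddress
from dataclasses import dataclass, field

# Hypothetical reference data; a real SOC would query a CMDB, IPAM or vulnerability scanner.
ASSET_OWNERS = {"10.0.1.15": "finance-team", "10.0.2.40": "hr-team"}
NETWORKS = {
    "finance-lan": ipaddress.ip_network("10.0.1.0/24"),
    "hr-lan": ipaddress.ip_network("10.0.2.0/24"),
}
VULNERABLE_HOSTS = {"10.0.1.15": ["CVE-2019-0708"]}  # e.g., results of the last scan


@dataclass
class Alert:
    rule: str
    src_ip: str
    context: dict = field(default_factory=dict)


def enrich(alert: Alert) -> Alert:
    """Answer the L1 analyst's routine questions automatically."""
    ip = ipaddress.ip_address(alert.src_ip)
    # Who owns this IP address?
    alert.context["owner"] = ASSET_OWNERS.get(alert.src_ip, "unknown")
    # Which network is the device part of?
    alert.context["network"] = next(
        (name for name, net in NETWORKS.items() if ip in net), "unknown"
    )
    # Does the system have known vulnerabilities?
    alert.context["vulnerabilities"] = VULNERABLE_HOSTS.get(alert.src_ip, [])
    return alert


def should_escalate(alert: Alert) -> bool:
    """Hypothetical rule: escalate if the host is vulnerable or not in the inventory."""
    ctx = alert.context
    return bool(ctx["vulnerabilities"]) or ctx["owner"] == "unknown"


if __name__ == "__main__":
    a = enrich(Alert(rule="RDP brute force", src_ip="10.0.1.15"))
    print(a.context, should_escalate(a))
```

Keeping enrichment separate from the escalation rule means the rule can be tuned (or replaced by a model) without touching the lookups.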

Today, there are too many alerts for cyber professionals – and more will be coming. Our focus should be on enriching these alerts automatically and escalating them to expert teams for decisions – then dealing with response and containment.

Our analysis has shown that 80% of an L1 Analyst’s workload involves manually enriching alerts. Virtual agents (AI bots) could perform this work faster, allowing security analysts to focus on threat hunting and on proactive cyber security risk assessment to identify attacks and exploits for which detection rules do not exist.

How AI Will Impact Incident Response (L2)

Once one or more alerts have been escalated, the incident must be contained and resolved, and the threat actor must be eradicated from the systems. The question here is: How can AI improve the speed of incident response? The speed at which an organization needs to detect and respond to an attack should be driven by the magnitude of potential loss over time.

The problem is that knowledge of attack techniques, the IT environment, and vulnerabilities is too vast and too dynamic for any human to learn and process. Machines, on the other hand, can process large amounts of data and perform complex processes (algorithms) faster – and can assist humans in knowing where to look and prioritizing next steps. These areas of knowledge can be brought together quickly to aid the human decision-making process.

With the assistance of AI bots, we can refocus human efforts at the L2 level on high-probability, high-risk attacks – but it’s still the cyber experts who must make the final call.
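One simple way to express “highly probable, high risk” is an expected-loss style score: the estimated probability that the activity is a genuine attack multiplied by its potential business impact. The sketch below ranks incidents this way. The `Incident` type, the probabilities, impact figures, and the `min_risk` cutoff are all hypothetical; in practice they would come from detection models and business input, with the analyst still making the final call.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    name: str
    probability: float  # estimated likelihood the activity is a real attack (0-1)
    impact: float       # estimated business loss if the attack succeeds (hypothetical units)

    @property
    def risk(self) -> float:
        # Expected-loss style score: likelihood x impact.
        return self.probability * self.impact


def triage_queue(incidents, min_risk):
    """Return incidents worth an L2 analyst's time, highest risk first."""
    worth_it = [i for i in incidents if i.risk >= min_risk]
    return sorted(worth_it, key=lambda i: i.risk, reverse=True)


if __name__ == "__main__":
    queue = triage_queue(
        [
            Incident("phishing click on HR laptop", 0.6, 20_000),
            Incident("port scan from internet", 0.9, 500),
            Incident("admin login from new country", 0.3, 250_000),
        ],
        min_risk=5_000,
    )
    for inc in queue:
        print(f"{inc.name}: {inc.risk:,.0f}")
```

Note how a likely but low-impact event (the port scan) drops out, while a less likely but costly one rises to the top.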

Mapping the Human Mind: Human Functions vs. Machine Functions

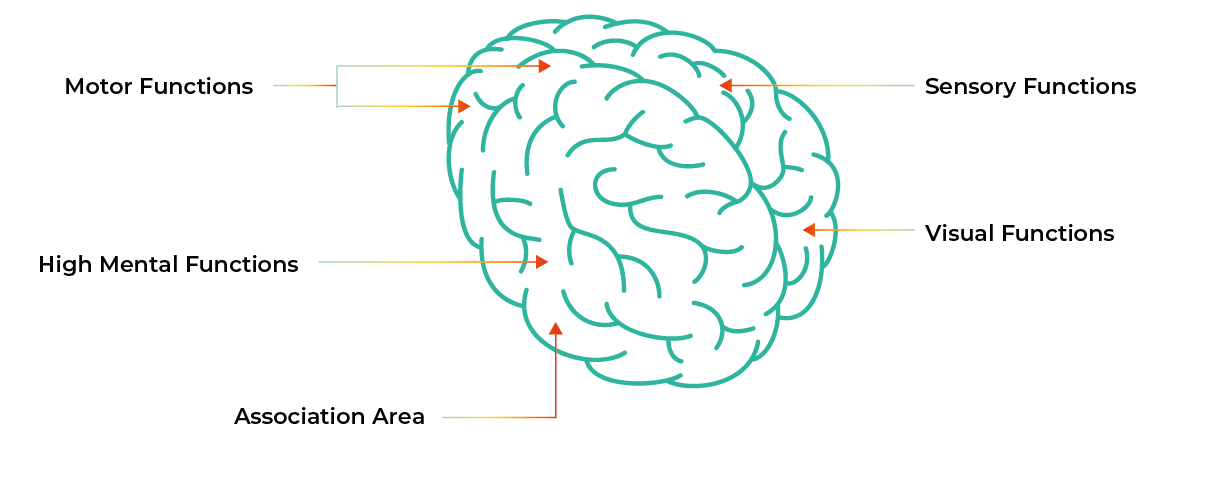

Since human security analysts are overloaded with alerts, our focus must shift to finding ways to leverage the power of machines in security operations – thereby freeing up human analysts to do the more creative jobs that machines are unable to perform. To explain this idea, we decided to look at the human brain. By examining and breaking down the different functions of the human brain, it becomes easier to understand how AI can assist in security operations.

The functions of the brain are shown below:

Sensory functions, i.e., the sense of touch – sensing exactly what’s happening around us. In terms of security operations, we have logs (processing discrete events), SIEM (rules), user behavior analytics (ML), network and device behavior (using ML to identify anomalies), and anti-malware (DL) – and our job is to interpret these different signals. In the context of the SOC, as we continue to receive more alerts, we need to determine which ones are important, put context around them, and detect patterns. That’s what the human brain does with sensory input. Bottom line: We need to leverage AI to focus more on making sense of and enriching the alerts that come in.
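One way to make sense of many sensors is to correlate them: if several independent sources (a SIEM rule, user behavior analytics, anti-malware) fire on the same host within a short window, that is a stronger signal than any single alert. The sketch below assumes a hypothetical normalized alert format (`time`, `host`, `source`) and a 30-minute window; production correlation engines are considerably more elaborate.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical normalized alerts from the SOC's different "senses".
alerts = [
    {"time": datetime(2019, 11, 4, 9, 0), "host": "ws-17", "source": "siem_rule"},
    {"time": datetime(2019, 11, 4, 9, 5), "host": "ws-17", "source": "ueba"},
    {"time": datetime(2019, 11, 4, 9, 7), "host": "ws-17", "source": "anti_malware"},
    {"time": datetime(2019, 11, 4, 13, 0), "host": "ws-22", "source": "siem_rule"},
]


def correlate(alerts, window=timedelta(minutes=30)):
    """Per host, the largest number of distinct sources that fired within `window`."""
    by_host = defaultdict(list)
    for a in sorted(alerts, key=lambda a: a["time"]):
        by_host[a["host"]].append(a)

    scores = {}
    for host, items in by_host.items():
        best = 0
        for i, first in enumerate(items):
            # Distinct sources seen from this alert up to `window` later.
            sources = {a["source"] for a in items[i:] if a["time"] - first["time"] <= window}
            best = max(best, len(sources))
        scores[host] = best
    return scores


if __name__ == "__main__":
    print(correlate(alerts))
```

A host where three different sensors agree within minutes is a natural candidate to enrich and review first.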

Motor functions, aligned to muscle memory. After doing something multiple times, we act automatically. In a SOC, we should not be doing things ad hoc; rather, when we see things repeating themselves, we should learn from past experience and automate as much of the response as we can, so that our analysts can focus on other things. Where this touches AI is in reinforcement learning (a topic currently being explored at Stanford University) and related approaches such as inverse reinforcement learning: algorithms that help interpret what’s going on and make sense of why people are making the decisions they are making.
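Spotting the repetition is the first step toward that muscle memory. The sketch below is deliberately much simpler than reinforcement learning: it only counts how often an alert type was handled with the same sequence of analyst actions, and flags frequent, consistent sequences as candidates for an automated playbook. The case-history format, action names, and thresholds are hypothetical.

```python
from collections import Counter

# Hypothetical case history: (alert type, sequence of actions the analyst took).
history = [
    ("phishing", ("block_sender", "purge_mailbox", "reset_password")),
    ("phishing", ("block_sender", "purge_mailbox", "reset_password")),
    ("phishing", ("block_sender", "purge_mailbox", "reset_password")),
    ("phishing", ("block_sender", "notify_user")),
    ("malware", ("isolate_host", "reimage")),
]


def automation_candidates(history, min_repeats=3, min_share=0.6):
    """Suggest playbooks: action sequences an alert type is handled with again and again.

    With min_share > 0.5, at most one sequence per alert type can qualify.
    """
    per_type = Counter(alert_type for alert_type, _ in history)
    sequences = Counter(history)
    suggestions = {}
    for (alert_type, actions), count in sequences.items():
        share = count / per_type[alert_type]
        if count >= min_repeats and share >= min_share:
            suggestions[alert_type] = list(actions)
    return suggestions


if __name__ == "__main__":
    print(automation_candidates(history))
```

Here the phishing response recurs in three of four cases and would be flagged, while the single malware case would not.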

Visual functions, meaning pattern or image recognition. The human mind sees light signals, interprets them, and turns them into a perception. This interpretation also tells us where to look next. From a SOC perspective, this relates to how we create a picture of what is happening. Observables are presented to analysts so they can compare them with historical patterns and recognize a type of attack or identify a threat actor, since many threat actors have characteristic ways of attacking.
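Comparing observed behavior with historical patterns can be as simple as measuring set overlap. The sketch below scores hypothetical threat-actor profiles by Jaccard similarity against the techniques observed in an incident. The actor names and profiles are invented for illustration; the IDs are MITRE ATT&CK technique identifiers, but which actor uses which technique here is not real intelligence.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap between two sets of techniques (0 = none in common, 1 = identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical actor profiles built from past incidents (MITRE ATT&CK technique IDs).
ACTOR_PROFILES = {
    "actor_A": {"T1566", "T1059", "T1003", "T1041"},
    "actor_B": {"T1566", "T1021", "T1486"},
}


def closest_actors(observed: set, profiles=ACTOR_PROFILES, top=3):
    """Rank known actor profiles by similarity to the observed techniques."""
    scores = [(name, jaccard(observed, ttps)) for name, ttps in profiles.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)[:top]


if __name__ == "__main__":
    print(closest_actors({"T1566", "T1059", "T1003"}))
```

A high score is a lead for the analyst to investigate, not an attribution.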

Higher mental functions, which include putting the puzzle pieces together, problem solving, thinking, planning, and making judgement calls. These are all things that humans still must do, and they will probably remain the exclusive domain of human beings for a long time. But there are ways for humans to interact with machines and get their recommendations, and there are aspects of these processes that machines do better. For example, IBM’s Deep Blue beat world chess champion Garry Kasparov in 1997 largely because it could evaluate an enormous number of possible moves and see multiple steps ahead. Within the context of the SOC, AI will likely come into play in cyber security risk assessment, bringing many different inputs together and understanding the patterns. The interaction could potentially take the form of a conversation, with questions posed like:

- What is the probable next step of an attack? “Calculate where next steps might be so we can conduct threat hunting.”

- What is the potential target of an attack? “Figure out if the threat actors are looking for data to steal – or if they are interested in identifying systems to disrupt – and understand their intent, so that we can direct next steps vis-à-vis defense.”

- Are there similar assets? “Has the threat actor attacked certain assets in the past, and what similar assets exist within the organization? This information can help us figure out where the attacker is going.”
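The first of these questions, the probable next step of an attack, can be approximated in many ways. As one hedged illustration, the sketch below fits a simple first-order Markov model to hypothetical past incidents recorded as sequences of attack stages (stage names loosely follow MITRE ATT&CK tactics) and ranks the most frequent follow-on stages. It is a toy model, not the method used by any particular product.

```python
from collections import Counter, defaultdict

# Hypothetical past incidents, each recorded as an ordered list of attack stages.
past_attacks = [
    ["initial-access", "execution", "credential-access", "lateral-movement", "exfiltration"],
    ["initial-access", "execution", "credential-access", "lateral-movement", "impact"],
    ["initial-access", "execution", "persistence"],
    ["initial-access", "credential-access", "lateral-movement", "exfiltration"],
]


def build_transitions(sequences):
    """Count how often each stage was directly followed by each other stage."""
    transitions = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            transitions[current][nxt] += 1
    return transitions


def probable_next_steps(transitions, current_stage, top=2):
    """Return the most likely next stages with their empirical probabilities."""
    counts = transitions.get(current_stage, Counter())
    total = sum(counts.values())
    if not total:
        return []
    return [(stage, n / total) for stage, n in counts.most_common(top)]


if __name__ == "__main__":
    t = build_transitions(past_attacks)
    print(probable_next_steps(t, "lateral-movement"))
```

If the current incident has reached lateral movement, the output points threat hunters first toward signs of exfiltration, then toward disruptive impact.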

This is how AI can work together with the analyst in ways that are most useful to making security operations faster and more accurate – providing the kind of information that can then drive the work of the human analyst.

Association area, i.e., where it all comes together. Within the context of security operations, this is where we take the various inputs and bring them together in a collaboration area. This is why development teams love chat tools: they are effective ways of bringing people together, where analysts can see everything in one place and follow a series of events as they happen. These kinds of tools allow us to present information tactically, and using them effectively can have a huge impact in shortening the handling time of an incident.
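A basic building block of such a shared view is a single chronological timeline assembled from every tool’s events. The sketch below merges already-sorted, hypothetical event streams (EDR, proxy, SIEM) with the standard library’s `heapq.merge`; the event tuples and descriptions are illustrative assumptions.

```python
import heapq
from datetime import datetime

# Hypothetical per-tool event streams, each already sorted by time.
edr_events = [
    (datetime(2019, 11, 4, 9, 2), "edr", "powershell.exe spawned by winword.exe"),
    (datetime(2019, 11, 4, 9, 9), "edr", "lsass.exe memory read"),
]
proxy_events = [
    (datetime(2019, 11, 4, 9, 1), "proxy", "download from rare domain"),
]
siem_events = [
    (datetime(2019, 11, 4, 9, 15), "siem", "admin login on file server"),
]


def incident_timeline(*streams):
    """Merge time-sorted event streams into one chronological view for the analyst."""
    return list(heapq.merge(*streams, key=lambda event: event[0]))


if __name__ == "__main__":
    for ts, source, description in incident_timeline(edr_events, proxy_events, siem_events):
        print(f"{ts:%H:%M} [{source}] {description}")
```

Because each stream is already sorted, `heapq.merge` combines them lazily without re-sorting everything.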

Cyber Security Strategy For Organizations Should Shift To Response Time

At CyberProof, most of our clients today understand that no matter how much money you spend on prevention, there is a real possibility that an attacker will successfully carry out an attack. It’s not “if” but “when.”

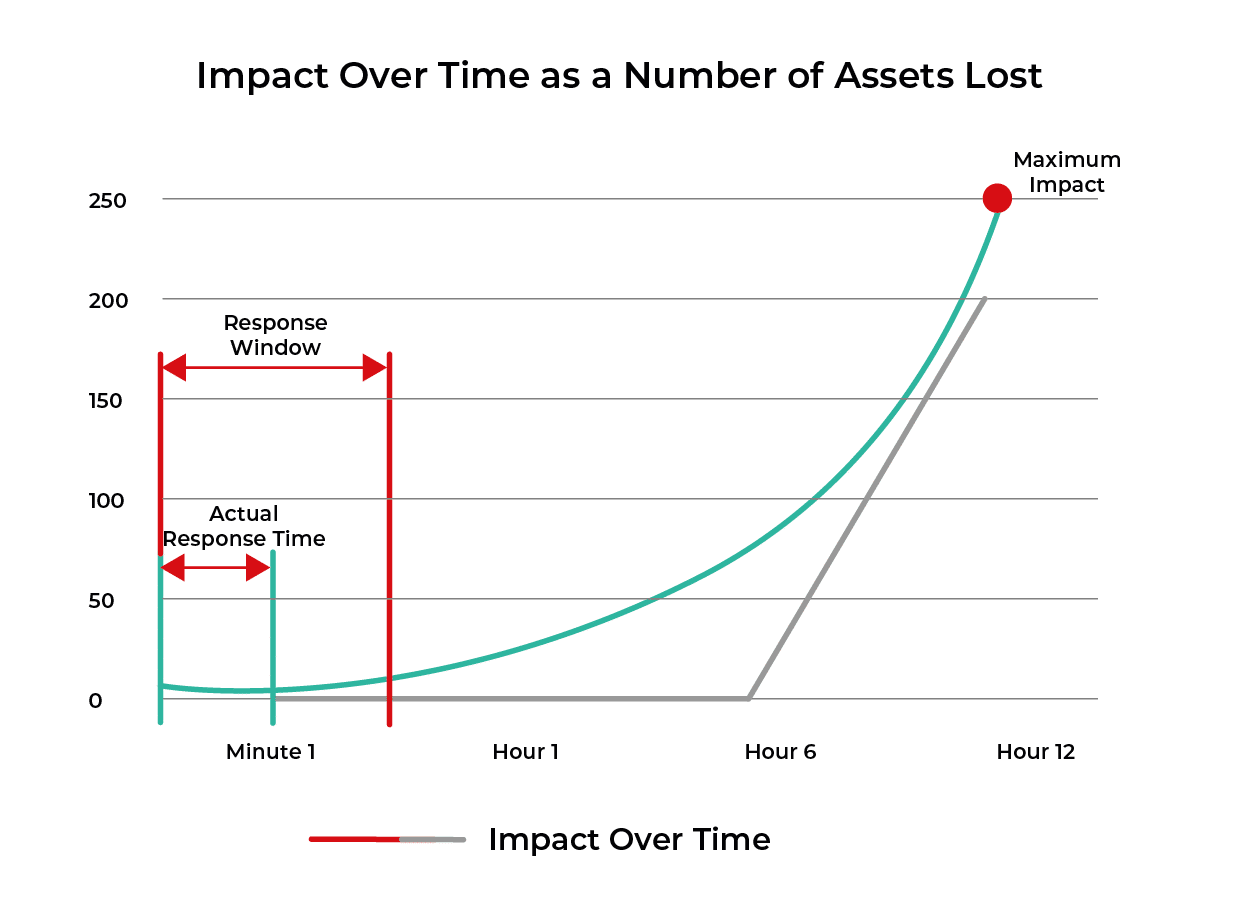

Security operations is, in and of itself, a preventive control – assuming important attacks can be reliably detected and contained fast enough. The risk of each attack type may be acceptable if the attack is contained within a certain period of time; we call this the response curve. The question is: What can security operations do to contain attacks quickly enough to keep the magnitude of loss at an acceptable level?

For high-priority attack types like data breach or business disruption, the magnitude of loss is well known. By leveraging these metrics, we can determine the degree of loss over time, i.e., the damage to the business if our systems go down for a minute, for a day, or for a week. The diagram above illustrates this point.
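The loss-over-time idea can be sketched numerically. Assuming, purely for illustration, that loss accrues linearly at a fixed hourly rate per attack type (the rates below are invented, and real loss curves are rarely linear), the same model gives both the loss after a minute, a day, or a week and the longest containment time that keeps loss within the organization’s risk appetite, which is one point on the response curve.

```python
from datetime import timedelta

# Hypothetical loss model: loss accrues linearly at a fixed hourly rate per attack type.
LOSS_PER_HOUR = {
    "business_disruption": 50_000.0,
    "data_breach": 8_000.0,
}


def loss_after(attack_type: str, elapsed: timedelta) -> float:
    """Estimated loss if the attack runs uncontained for `elapsed`."""
    hours = elapsed.total_seconds() / 3600
    return LOSS_PER_HOUR[attack_type] * hours


def max_containment_time(attack_type: str, acceptable_loss: float) -> timedelta:
    """Longest time an attack may run before loss exceeds the risk appetite."""
    return timedelta(hours=acceptable_loss / LOSS_PER_HOUR[attack_type])


if __name__ == "__main__":
    for label, t in [("1 minute", timedelta(minutes=1)),
                     ("1 day", timedelta(days=1)),
                     ("1 week", timedelta(weeks=1))]:
        print(f"business_disruption after {label}: ${loss_after('business_disruption', t):,.0f}")
    print(max_containment_time("business_disruption", acceptable_loss=100_000))
```

With these made-up numbers, a $100,000 risk appetite for business disruption means the SOC has two hours to contain the attack.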

How to Use AI to Help Human Analysts Work Optimally

Today, most of us are well aware that there simply are not enough experienced cyber security analysts to meet demand. In fact, in 2018-2019, 53 percent of organizations reported a “problematic shortage” of cyber security skills, according to CSO Online. The problem is becoming more acute as the attack surface continues to grow – with more endpoints (IoT, OT), more threat actors, and more complexity around applications, cloud, and other frontiers.

To date, AI adoption has focused on prevention and anomaly detection, producing more alerts. Looking ahead, AI must focus on reducing the workload of overloaded cyber security analysts and reducing the time to detect and respond. AI must help humans make decisions to deal with the exploding number of alerts – fundamentally reducing the time to respond and mitigating the damage caused by attacks.
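Progress toward that goal can be tracked with two common SOC metrics, mean time to detect (MTTD) and mean time to respond (MTTR). Definitions vary between organizations; the sketch below assumes MTTD runs from attack start to detection and MTTR from detection to containment, and the incident records are hypothetical.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records: when the attack started, was detected, and was contained.
incidents = [
    {"start": datetime(2019, 10, 1, 8, 0), "detected": datetime(2019, 10, 1, 10, 0),
     "contained": datetime(2019, 10, 1, 14, 0)},
    {"start": datetime(2019, 10, 7, 22, 0), "detected": datetime(2019, 10, 8, 2, 0),
     "contained": datetime(2019, 10, 8, 3, 0)},
]


def mean_hours(deltas):
    """Average a collection of timedeltas, expressed in hours."""
    return mean(d.total_seconds() / 3600 for d in deltas)


mttd = mean_hours(i["detected"] - i["start"] for i in incidents)      # mean time to detect
mttr = mean_hours(i["contained"] - i["detected"] for i in incidents)  # mean time to respond (to containment)

if __name__ == "__main__":
    print(f"MTTD: {mttd:.1f} h, MTTR: {mttr:.1f} h")
```

Tracking both numbers over time shows whether automation is actually shortening the response curve rather than just adding alerts.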

¹ According to Wikipedia, AI is defined very simply as “intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans.”