The 2024 U.S. presidential election continues to be a high-priority target for nation-state threat actors. Recent developments underscore the involvement of Iran, Russia, and China, each using a range of tactics to disrupt the electoral process and influence public sentiment.

Emerging threats

- Coordinated Influence Operations: Both Russia and Iran are leveraging advanced disinformation techniques to manipulate public perception. These campaigns exploit existing divisions in U.S. society to inflame tensions around sensitive issues, aiming to destabilize the election process.

- Cyberattacks on Campaigns: Phishing and credential theft, often paired with disinformation, remain critical tools for foreign actors targeting the infrastructure of both Republican and Democratic campaigns.

- AI-Driven Disinformation: AI-driven disinformation is becoming a major threat as state-sponsored actors use AI to produce hyper-realistic deepfakes, automated narratives, and tailored propaganda. As AI gets better at analyzing and mimicking human behavior, malicious actors can target specific demographics with highly personalized disinformation, further eroding trust in reliable information sources.

Past attacks & techniques

In previous election cycles, notably the 2016 and 2020 U.S. elections, we observed attacks from various nation-state actors, each with distinct strategic objectives. Russian interference was particularly aggressive, encompassing cyberattacks on organizations such as the Democratic National Committee (DNC) and widespread disinformation campaigns run by groups like the Internet Research Agency (IRA). Iran’s tactics included voter intimidation and the dissemination of false information through spoofed emails and fabricated social media posts designed to sow confusion and undermine trust in the electoral process. China’s activities, by contrast, centered on cyber espionage, with efforts concentrated on collecting intelligence about political figures and promoting narratives that serve its geopolitical ambitions.

Current threats to the 2024 election

- Iranian Involvement – Mint Sandstorm (Charming Kitten):

The Trump campaign has disclosed that Mint Sandstorm, an Iranian state-sponsored group, breached its cybersecurity defenses and stole sensitive documents. Mint Sandstorm, also known as Charming Kitten, is associated with the Islamic Revolutionary Guard Corps (IRGC) and is infamous for phishing and social engineering, including impersonating technical support for services such as AOL, Google, Yahoo, Microsoft, and WhatsApp. The group targets individuals connected to both President Joe Biden’s and former President Donald Trump’s administrations. By stealing documents and potentially releasing manipulated versions, the Iranian operatives aim to create instability and deepen existing political divides within the U.S. Historically, the group has targeted dissidents, media personnel, and human rights advocates, often exploiting polarizing societal issues such as racial tensions, economic inequality, and gender rights.

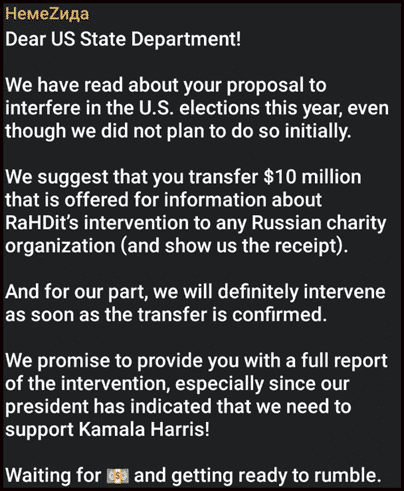

- Russian Threat Actor – RaHDit and the Doppelgänger Campaign:

The Russian group RaHDit, which has previously conducted cyberattacks against Ukrainian targets, recently announced its intention to interfere with the 2024 U.S. election. Meanwhile, U.S. authorities have seized multiple domains tied to Doppelgänger, a sophisticated Russian disinformation campaign that promotes propaganda and seeks to erode global support for Ukraine. Since late 2023, Doppelgänger has expanded beyond Ukrainian issues to target public opinion in the U.S., Israel, France, and Germany. It uses a broad network of social media accounts across platforms like TikTok, Instagram, YouTube, and Cameo to spread misleading narratives, and it mimics legitimate news websites to confuse and mislead audiences. Key tactics include:

- Botnets and URL Redirection: These are used to disguise the origin of disinformation.

- Cloudflare’s CDN: The campaign’s infrastructure, fronted by Cloudflare’s content delivery network, is regularly updated to evade detection.

Russian threat actor RaHDit claims it will now try to interfere with the U.S. election.

- Chinese Influence Campaign – Spamouflage:

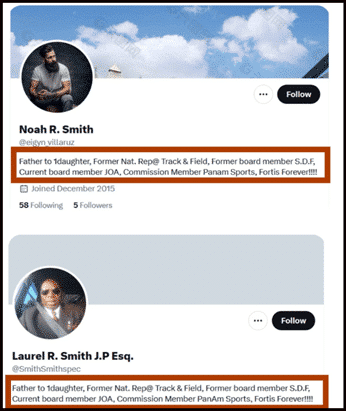

China’s Spamouflage operation continues to evolve as a significant threat to U.S. election integrity. This state-sponsored influence campaign, identified as supporting Chinese government interests, spreads false narratives and misleading information through thousands of fake social media accounts. These profiles pose as American citizens, using hashtags like #american to build credibility while weighing in on divisive issues such as the Israel-Hamas conflict, gun control, racial disparities, and U.S. foreign policy. Although Meta has dismantled many of these accounts across its platforms, including Instagram and WhatsApp, the reach of these efforts highlights China’s subtle yet strategic approach to shaping U.S. political discourse and undermining trust in democratic processes.

Chinese threat actor disseminating false narratives and misleading information.

As we move closer to the 2024 election, U.S. election officials, campaigns, and technology providers must strengthen their defenses to counter these coordinated efforts by nation-state actors. The escalation in Iranian, Russian, and Chinese cyber activities demands a multi-faceted response to protect the integrity of the electoral process.

CyberProof’s recommendations

Implementing advanced cybersecurity measures is crucial for protecting campaign infrastructure against evolving threats. Multifactor authentication (MFA) and network segmentation are key defenses against phishing, credential theft, and unauthorized access. Alongside these security measures, campaigns and election officials should work with technology companies to boost public awareness and media literacy, helping voters recognize disinformation and resist AI-driven deepfakes and manipulated content. AI-based detection tools can also help proactively identify and curb the fake accounts, bot-driven disinformation campaigns, and deepfake videos that spread through social media platforms.