This blog is part 3 of a three-part article on maturity models. For more information, read part 1 and part 2.

Performance and financial maturity

To understand the importance of Performance and Financial Maturity, consider how other areas of the business are measured. For most, if not all, other business functions, the performance metrics are clear and easy to understand.

For example, Marketing is measured on opportunities created and public sentiment; Sales on revenue generation and margin; and Manufacturing on output, productivity, and defect rate. Every one of these functions is also measured on how effectively it uses its budget to achieve the goals of the business.

Now contrast this with the single binary metric commonly applied to the Security Department: did the company suffer a significant cyber-related loss, yes or no? In no other part of the business would a single binary metric be considered an acceptable measure of performance.

The metrics used for other business areas can easily be adapted to measure the performance of the Security Organization and begin the journey toward Performance Maturity. Essential performance metrics are shown in Figure 5 below. Note also that if a metric or statistic isn’t influencing a business decision, it probably isn’t worth the time and effort to collect.

All metrics should be developed to answer five key questions for the business. These are:

1. Is it aligned to the business?

Are the technical security controls and processes designed to align with the business, and are they effective at reducing risks to the business? This links directly to the first tenet, Business Alignment, and much of the evidence for these metrics is gathered from the Business Cyber Risk Assessment and the Cyber Risk Register. Effective management and governance in creating these two documents are essential to the company’s Enterprise Risk Management (ERM) program.

2. Are controls and processes working as designed?

Are the technical security controls and processes working as designed? “We don’t think we’ve been breached” is not the answer here.

Successful testing and validation are what prove the assertion that the controls and processes are working as designed. There are two possible ways to approach testing. The first is to encourage malicious actors to attack our organization using every tactic and technique we’ve envisioned and see how our defenses hold up. You’ve probably guessed that this isn’t the best option!

The far better option is comprehensive testing and audit. Effective validation through testing must be a well-planned, well-coordinated combination of vulnerability scanning, attack surface mapping, and penetration testing. Audit is then layered on top of testing to ensure that the processes as designed and documented are being executed accurately and consistently throughout the organization.

Critical note: penetration testing and auditing should combine internal and external teams, for two reasons: expectation error and independence. Expectation error is the tendency of a person to see what they expect to see and to test only what they already know about; it’s the reason proofreaders and editors exist. The need for independence, and freedom from conflicts of interest, may seem obvious until you look at how many CISOs, ISMS teams, and auditors report to people like the CIO. Auditing your boss’s organization is an obvious conflict of interest, but it’s also very common. Finally, keep in mind that the sample size and test duration must be chosen to yield the desired confidence in the findings.
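To make the sample-size point concrete, here is a minimal sketch of one common statistical technique, zero-deviation (discovery) attribute sampling. The article does not prescribe this method; it is offered only as an illustration. If the true deviation rate is p, the chance of drawing n items with no deviations is (1 - p)^n, so n must satisfy (1 - p)^n <= 1 - C for a confidence level C. The function name, its parameters, and the 95%/5% targets are hypothetical.

```python
import math


def required_sample_size(confidence: float, tolerable_rate: float) -> int:
    """Smallest sample size n such that observing zero deviations in n items
    supports, at the given confidence, the claim that the true deviation rate
    is below tolerable_rate (hypothetical helper for illustration).

    If the true rate is p, P(zero deviations in n items) = (1 - p) ** n.
    Requiring (1 - p) ** n <= 1 - confidence gives
    n >= ln(1 - confidence) / ln(1 - p).
    """
    if not (0 < confidence < 1 and 0 < tolerable_rate < 1):
        raise ValueError("confidence and tolerable_rate must be in (0, 1)")
    return math.ceil(math.log(1 - confidence) / math.log(1 - tolerable_rate))


if __name__ == "__main__":
    # Hypothetical audit target: 95% confidence that fewer than 5% of
    # sampled control executions deviate from the documented process.
    print(required_sample_size(0.95, 0.05))  # 59
```

This sketch uses the binomial (large-population) approximation. For small populations, a hypergeometric calculation yields somewhat smaller samples.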

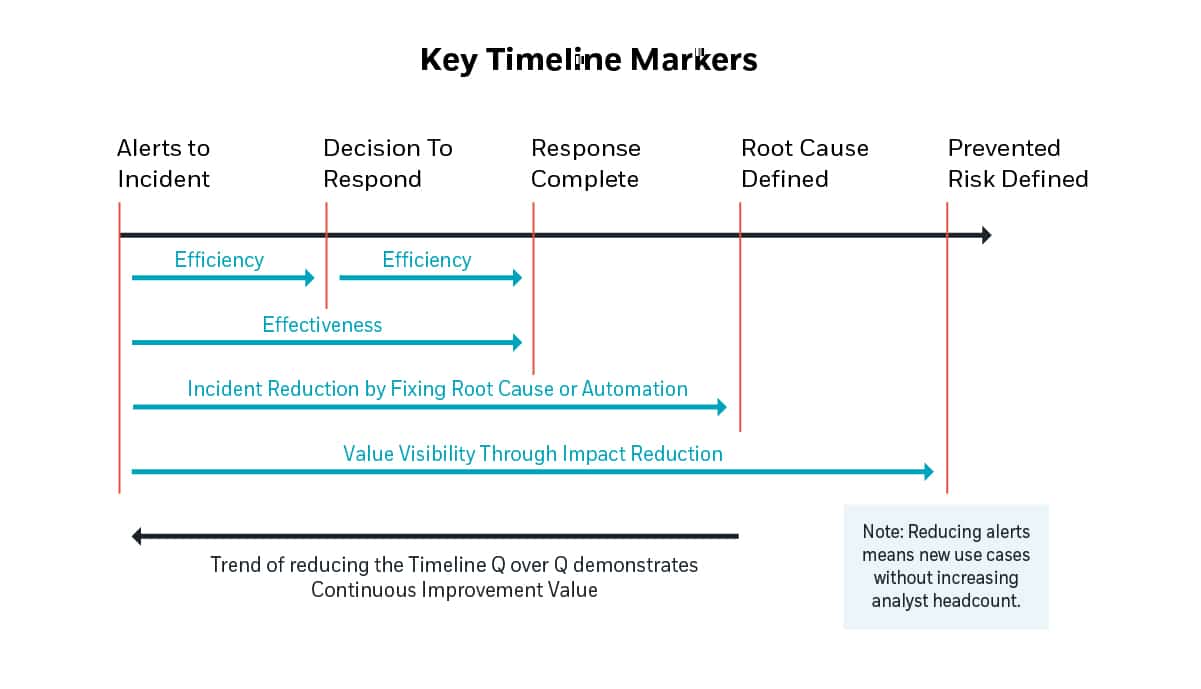

Lastly, one often-neglected component of this measurement is incident response/containment time. Tracking the organization’s Incident Response performance is a critical performance measure. It also provides an opportunity to get things right before a high-impact incident occurs!

3. Does Security Operations function efficiently?

This is a particularly interesting challenge, because SOCs and the tools they use often do not provide the metrics and statistics we need to validate this aspect of performance. Below are some core metrics that help measure efficiency.

- Use Case build time. This is the elapsed time from awareness of a new detectable technique (or the decision to look for a technique) until the deployment of a fully functioning Use Case. By fully functioning, I mean the combination of detection capability and a planned response that meets the response requirement indicated by the incident impact curve associated with the technique’s mapped incident.

- Time to automate. This measures the organization’s ability to recognize a repeating alert (detection) that has a common response, and to automate that response. In a modern SOC, automation is absolutely critical to handling the ever-expanding threat landscape and the ever-increasing volume of log sources, events, and alerts.

- Alert Assessment Time. This is the time from alert generation until the alert is classified as either a False Positive (FP) or an Actionable Incident (a true positive, TP). This Triage Time can be greatly reduced by automating incident recognition and alert enrichment to support the analyst’s decision.

- Incident Response/Containment Time. We need to ensure not only that the response to an incident is correct, but also that it was fast enough to limit losses to an acceptable level (see the Impact Curve concept). This is sometimes erroneously measured from the moment an alert is confirmed as an actionable incident until the incident is mitigated/contained. A more appropriate measurement starts when the alert was generated (assuming the detection system operates in near real time relative to the triggering events). The impact of an incident isn’t on hold just because we haven’t recognized it as an incident yet! This is another area where automation is key.

- Percent of total Use Cases leveraging automation. We can also track the percent of Use Cases that are fully automated and the overall degree of automation across all Use Cases.

- Other useful metrics. These may include the percent of alerts fully managed by L1 analysts, the percent of alerts escalated to L2, the trend of false positives (FPs) escalated to L2, the percent of alerts resulting in an infrastructure, configuration, or process change, and the percent of alerts derived from threat intelligence sources.
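Most of these efficiency metrics are simple arithmetic over alert timestamps. The sketch below assumes a hypothetical alert record with generation, classification, and containment times. The field and function names are illustrative and are not taken from any particular SOC platform. It computes Alert Assessment Time, containment time, and the percent of Use Cases leveraging automation. It also shows how starting the containment clock at alert generation, rather than at classification, changes the result.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class AlertRecord:
    """Hypothetical alert record; field names are illustrative only."""
    use_case: str
    generated_at: datetime                   # detection fired
    classified_at: datetime                  # triage decision made (FP or TP)
    is_true_positive: bool
    contained_at: Optional[datetime] = None  # set only for actionable incidents


def _minutes(delta: timedelta) -> float:
    return delta.total_seconds() / 60


def mean_assessment_minutes(records: list[AlertRecord]) -> float:
    """Alert Assessment (Triage) Time: alert generation -> FP/TP decision."""
    return mean(_minutes(r.classified_at - r.generated_at) for r in records)


def mean_containment_minutes(records: list[AlertRecord],
                             from_generation: bool = True) -> float:
    """Incident Response/Containment Time, averaged over true positives.

    from_generation=True starts the clock when the alert fired;
    False starts it at classification, which understates how long
    the incident was actually running.
    """
    incidents = [r for r in records
                 if r.is_true_positive and r.contained_at is not None]
    return mean(
        _minutes(r.contained_at - (r.generated_at if from_generation else r.classified_at))
        for r in incidents
    )


def percent_automated(use_case_automated: dict[str, bool]) -> float:
    """Percent of Use Cases whose response leverages automation."""
    return 100 * sum(use_case_automated.values()) / len(use_case_automated)


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    records = [
        AlertRecord("phishing", t0, t0 + timedelta(minutes=10), True,
                    t0 + timedelta(minutes=40)),
        AlertRecord("brute_force", t0, t0 + timedelta(minutes=20), False),
        AlertRecord("malware", t0, t0 + timedelta(minutes=30), True,
                    t0 + timedelta(minutes=90)),
    ]
    print(mean_assessment_minutes(records))                          # 20.0
    print(mean_containment_minutes(records))                         # 65.0
    print(mean_containment_minutes(records, from_generation=False))  # 45.0
    print(percent_automated({"phishing": True, "brute_force": True,
                             "malware": False, "lateral_movement": False}))  # 50.0
```

In this toy data, measuring from classification reports 45 minutes of containment time, while the incidents were actually active for 65 minutes on average.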

4. Is Security Operations improving?

Is Security Operations getting better (more effective and more efficient) over time? An obvious place to start is to take all of the efficiency metrics from question 3 above and track them over time to yield a trend. Beyond those trends, another measure that is useful in assessing the continuous improvement program is:

Figure 5 Critical performance metrics

How well are we using incident root cause analysis to upgrade our controls and reduce incoming alerts and incidents? We can measure this by correlating recurring incidents with control changes and with the subsequent reduction or elimination of the alerts that were generating those incidents. A high correlation indicates that we’re learning from evidence and advancing our controls’ capability in response.
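One way to make the root-cause question measurable is to link each control change to the detection rule behind the recurring incident. You can then compare that rule’s alert volume before and after the change. The sketch below uses a simple before/after window comparison as a stand-in for a formal statistical correlation. The 30-day window, the 50% threshold, the rule IDs, and all function names are assumptions made for illustration.

```python
from datetime import date, timedelta


def alerts_in_window(alert_dates: list[date], start: date, end: date) -> int:
    """Count alerts dated in the half-open window [start, end)."""
    return sum(start <= d < end for d in alert_dates)


def reduction_after_change(alert_dates: list[date], change_date: date,
                           window_days: int = 30) -> float:
    """Fractional drop in alert volume, comparing the window_days before a
    control change with the window_days after it.
    1.0 means the alerts were eliminated; 0.0 or less means no improvement."""
    window = timedelta(days=window_days)
    before = alerts_in_window(alert_dates, change_date - window, change_date)
    after = alerts_in_window(alert_dates, change_date, change_date + window)
    if before == 0:
        return 0.0  # nothing to reduce; treat as no measurable effect
    return (before - after) / before


def share_of_effective_changes(changes: list[tuple[str, date]],
                               alerts_by_rule: dict[str, list[date]],
                               min_reduction: float = 0.5,
                               window_days: int = 30) -> float:
    """Fraction of root-cause-driven control changes (rule_id, change_date)
    followed by at least a min_reduction drop in that rule's alerts."""
    if not changes:
        return 0.0
    effective = sum(
        reduction_after_change(alerts_by_rule.get(rule, []), when, window_days)
        >= min_reduction
        for rule, when in changes
    )
    return effective / len(changes)


if __name__ == "__main__":
    change = date(2024, 3, 1)
    alerts_by_rule = {
        # 10 alerts in the month before the fix, 1 after
        "R1": [change - timedelta(days=i) for i in range(1, 11)]
              + [change + timedelta(days=5)],
        # same volume before and after: the change had no visible effect
        "R2": [change + timedelta(days=i) for i in (-8, -6, -4, -2, 2, 4, 6, 8)],
    }
    print(reduction_after_change(alerts_by_rule["R1"], change))  # 0.9
    print(share_of_effective_changes([("R1", change), ("R2", change)],
                                     alerts_by_rule))            # 0.5
```

Tracking this share over successive quarters gives a trend that fits alongside the efficiency trends from question 3.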

5. Is Security Operations managing their budget responsibly?

Last, but certainly not least, is the issue of financial responsibility. This can also be called Spend Maturity: a measure of how effectively and efficiently the organization uses its investment in security.

Infrastructure Rationalization is the first step. Some of the questions that need to be answered here are:

- Are there overlapping expenditures on multiple tools and processes that provide the same or similar controls?

- Exactly how much control redundancy is required?

- What is the due diligence process for determining “best value” product selection? Is it standardized?

- Is the organization focusing controls based on risk, or based on general coverage of a controls framework? Remember, ISO 27001 expects controls to be selected through risk assessment and risk treatment, not implemented simply because they are available. Each control should be justified by the risk reduction it delivers relative to its cost.

- Is the organization focusing on controls that enable the business, or controls that impede business operations? If controls are not business-aligned, they are likely to be circumvented or to become shelfware. Conversely, business-aligned controls that provide a competitive advantage are more willingly embraced by the organization.
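The first and fourth rationalization questions can be partly answered from a tool-to-control inventory. The sketch below is illustrative and assumes a hypothetical inventory mapping each tool to the controls it provides; the tool names and costs are invented. It flags controls provided by more than one tool and totals the spend involved. It also computes one common formulation of return on security investment (ROSI) from annualized loss expectancy (ALE). None of these names come from a specific product or framework API.

```python
from collections import defaultdict


def find_overlaps(tool_controls: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each control provided by more than one tool to those tools."""
    providers: dict[str, list[str]] = defaultdict(list)
    for tool, controls in tool_controls.items():
        for control in controls:
            providers[control].append(tool)
    return {c: sorted(tools) for c, tools in providers.items() if len(tools) > 1}


def spend_under_review(tool_controls: dict[str, set[str]],
                       annual_cost: dict[str, float]) -> float:
    """Annual cost of every tool involved in at least one overlap. This is an
    upper bound on what rationalization could touch, not a savings estimate."""
    involved = {t for tools in find_overlaps(tool_controls).values() for t in tools}
    return sum(annual_cost[t] for t in involved)


def rosi(ale_before: float, ale_after: float, annual_cost: float) -> float:
    """Return on security investment: (risk reduction - cost) / cost,
    with risk expressed as annualized loss expectancy (ALE)."""
    return (ale_before - ale_after - annual_cost) / annual_cost


if __name__ == "__main__":
    tools = {  # hypothetical inventory
        "EDR-A": {"endpoint_detection", "device_control"},
        "AV-B": {"endpoint_detection", "malware_scanning"},
        "SIEM-C": {"log_correlation"},
    }
    costs = {"EDR-A": 120_000.0, "AV-B": 40_000.0, "SIEM-C": 200_000.0}
    print(find_overlaps(tools))              # {'endpoint_detection': ['AV-B', 'EDR-A']}
    print(spend_under_review(tools, costs))  # 160000.0
    print(rosi(500_000, 200_000, 100_000))   # 2.0
```

An overlap is not automatically waste. The second question, how much redundancy is actually required, still has to be answered case by case before any tool is retired.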

Bottom line: Communicate clearly and transparently in business terms

In summary, value-driven security governance enables security operations to be viewed as an integral part of the business, engaged at the strategic as well as the tactical level. To achieve this, security operations leaders must communicate clearly and transparently in business terms, build trust, demonstrate the value of alignment, and provide executive reporting aligned to the goals of the business.
